Implementing Big Data to Get More Insight into Customers through Sentiment Analysis

Introduction

Nowadays, people prefer public online transportation to their own vehicles because they value time efficiency, privacy, and saving energy. Over time, however, people have become dissatisfied with the services these companies provide. In Indonesia, for example, the big players in online transportation are Gojek, Grab, and Uber. Yet these companies do not engage with their customers personally: they do not turn customer complaints into advertisements that make customers feel heard.

How to solve the problem?

In this case, we are going to crawl text data from Twitter with the keyword “@GrabID”, because people who complain on Twitter mention Grab's official account, @GrabID. I use the Orange application because it is simple to use.

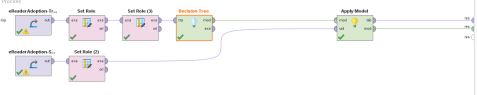

Here is the @GrabID data, which will become our data set.

From the picture above, we can see that there are 1462 documents with 3927 words. We can then process this data into a bag of words.
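In Orange this step is done through the GUI, but the idea behind a bag of words is simple: each tweet becomes a vector that counts how often each vocabulary word appears in it. The sketch below shows that idea in plain Python. It is not Orange's implementation; the tokenizer (lowercasing and a regex that keeps `@mentions`), the function names `tokenize` and `bag_of_words`, and the example tweets are all hypothetical, not taken from the real @GrabID data set.

```python
import re
from collections import Counter


def tokenize(text):
    # Assumed tokenizer: lowercase, keep word characters and @mentions like "@grabid".
    return re.findall(r"@?\w+", text.lower())


def bag_of_words(documents):
    """Return (vocabulary, matrix) where matrix[i][j] is how many times
    vocabulary[j] occurs in documents[i]."""
    token_lists = [tokenize(doc) for doc in documents]
    # The vocabulary is every distinct token across all documents, sorted.
    vocabulary = sorted({tok for toks in token_lists for tok in toks})
    index = {word: j for j, word in enumerate(vocabulary)}
    matrix = []
    for toks in token_lists:
        row = [0] * len(vocabulary)
        for tok, count in Counter(toks).items():
            row[index[tok]] = count
        matrix.append(row)
    return vocabulary, matrix


if __name__ == "__main__":
    # Hypothetical example tweets, not real @GrabID data.
    tweets = [
        "@GrabID driver cancelled my order again",
        "@GrabID the app is slow, order failed",
    ]
    vocab, bow = bag_of_words(tweets)
    print(len(tweets), "documents,", len(vocab), "distinct words")
    # Overall word frequencies give a first look at what customers complain about.
    overall = Counter(tok for tweet in tweets for tok in tokenize(tweet))
    print(overall.most_common(3))
```

The overall word frequencies are a quick way to spot recurring complaint topics before moving on to sentiment analysis.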

Conclusion

Once we know the big picture of our customers' complaints, we can understand our customers better. For Grab, this means the company can make its advertising more efficient and respond to its customers more personally.