Abstract

This analysis was carried out to fulfill an assignment for the Big Data course and to extract meaningful information from the example data set.

The Attributes:

- User_ID: A numeric, unique identifier assigned to each person who has an account on the company’s web site.

- Gender: The customer’s gender, as identified in their customer account. In this data set, it is recorded as ‘M’ for male and ‘F’ for female. The Decision Tree operator can handle non-numeric data types.

- Age: The person’s age at the time the data were extracted from the web site’s database. This is calculated to the nearest year by taking the difference between the system date and the person’s birthdate as recorded in their account.

- Marital_Status: The person’s marital status as recorded in their account. People who indicated on their account that they are married are entered in the data set as ‘M’. Because the web site does not distinguish between different kinds of single people, those who are divorced or widowed are included with those who have never been married (indicated in the data set as ‘S’).

- Website_Activity: This attribute indicates how active each customer is on the company’s web site. Working with Richard, we used the web site database’s records of the duration of each customer’s visits to calculate how frequently, and for how long each time, the customers use the web site. This is then translated into one of three categories: Seldom, Regular, or Frequent.

- Browsed_Electronics_12Mo: This is simply a Yes/No column indicating whether or not the person browsed for electronic products on the company’s web site in the past year.

- Bought_Electronics_12Mo: Another Yes/No column indicating whether or not they purchased an electronic item through Richard’s company’s web site in the past year.

- Bought_Digital_Media_18Mo: This attribute is a Yes/No field indicating whether or not the person has purchased some form of digital media (such as MP3 music) in the past year and a half. This attribute does not include digital book purchases.

- Bought_Digital_Books: Richard believes that as an indicator of buying behavior relative to the company’s new eReader, this attribute will likely be the best indicator. Thus, this attribute has been set apart from the purchase of other types of digital media. Further, this attribute indicates whether or not the customer has ever bought a digital book, not just in the past year or so.

- Payment_Method: This attribute indicates how the person pays for their purchases. In cases where the person has paid in more than one way, the mode, or most frequent method of payment is used. There are four options:

  - Bank Transfer: payment via e-check or other form of wire transfer directly from the bank to the company.

  - Website Account: the customer has set up a credit card or permanent electronic funds transfer on their account so that purchases are directly charged through their account at the time of purchase.

  - Credit Card: the person enters a credit card number and authorization each time they purchase something through the site.

  - Monthly Billing: the person makes purchases periodically and receives a paper or electronic bill which they pay later either by mailing a check or through the company web site’s payment system.
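Two of the attributes above are derived values: Age (the difference between the system date and the birthdate, to the nearest year) and Payment_Method (the mode of the methods a customer has used). The sketch below shows one way these could be computed. The function names, the 365.25-day year, and the sample customer are assumptions made for illustration; the source does not say how the web site actually performs the calculation.

```python
from collections import Counter
from datetime import date


def age_in_years(birthdate: date, extract_date: date) -> int:
    """Age to the nearest year, assuming an average year of 365.25 days."""
    days = (extract_date - birthdate).days
    return round(days / 365.25)


def most_frequent_payment(methods: list[str]) -> str:
    """Mode of a customer's payment methods; ties go to the method seen first."""
    return Counter(methods).most_common(1)[0][0]


# Hypothetical customer, invented for illustration only
print(age_in_years(date(2000, 1, 1), date(2020, 8, 1)))
print(most_frequent_payment(["Credit Card", "Bank Transfer", "Credit Card"]))
```
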

Steps:

1. Open RapidMiner and create a new blank process

2. Import the data files 7 - eReaderAdoption-Training.csv and 7 - eReaderAdoption-Scoring.csv

Here is a sample of our data:

3. Designing the process

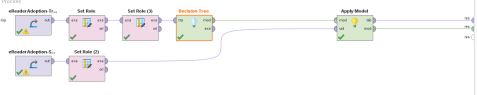

First, drag the Training and Scoring data sets from the repository onto the process page, then pick a “Set Role” operator from the Operators menu, as in the picture below:

In the “Set Role” operator, we set the attribute name to “User_ID” and the target role to “id”, as in the picture above.

After setting the role of User_ID, add a second “Set Role” operator from the Operators menu, as before, and place it on the Training process line.

In this second “Set Role” operator, set the attribute name to “eReader_Adoption” and the target role to “label”, in the same way as the previous one.
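Outside RapidMiner, the effect of the two “Set Role” operators can be approximated as follows: the id column is kept apart so it is not used for learning, and the label column becomes the prediction target. This is only a rough sketch of the idea, not RapidMiner's implementation. The helper `split_roles`, the sample rows, and the label values `ClassA` and `ClassB` are invented for illustration; only the column names come from the attribute list.

```python
import csv
import io

# Invented rows for illustration; only the column names come from the report.
SAMPLE_CSV = """User_ID,Gender,Age,Payment_Method,eReader_Adoption
1001,M,34,Credit Card,ClassA
1002,F,27,Bank Transfer,ClassB
"""


def split_roles(rows, id_col="User_ID", label_col="eReader_Adoption"):
    """Separate the id and label columns from the regular attributes."""
    ids, features, labels = [], [], []
    for row in rows:
        row = dict(row)                  # copy so the input rows are not modified
        ids.append(row.pop(id_col))      # role "id": kept, but not used for learning
        labels.append(row.pop(label_col))  # role "label": the target to predict
        features.append(row)             # everything else is a regular attribute
    return ids, features, labels


rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
ids, features, labels = split_roles(rows)
print(ids)
print(labels)
print(features[0])
```
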

4. Designing decision tree

Take the “Decision Tree” operator from the Operators menu and choose “gain_ratio” as the criterion. Gain ratio is the splitting criterion used by the C4.5 algorithm, so this gives us a basic C4.5-style decision tree. After placing the “Decision Tree” operator on the Training line, add an “Apply Model” operator, which is also taken from the Operators menu.
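To make the “gain_ratio” criterion more concrete, here is a minimal sketch of the textbook definition: information gain divided by split information. It is not RapidMiner's internal code, and the toy attribute values and labels `A` and `B` are invented for illustration.

```python
import math
from collections import Counter, defaultdict


def entropy(values):
    """Shannon entropy (in bits) of a list of categorical values."""
    total = len(values)
    return -sum((n / total) * math.log2(n / total) for n in Counter(values).values())


def gain_ratio(attribute_values, labels):
    """Information gain of an attribute divided by its split information."""
    total = len(labels)
    groups = defaultdict(list)
    for value, label in zip(attribute_values, labels):
        groups[value].append(label)
    # Weighted entropy of the labels after splitting on the attribute
    remainder = sum(len(g) / total * entropy(g) for g in groups.values())
    info_gain = entropy(labels) - remainder
    split_info = entropy(attribute_values)
    # An attribute with a single value cannot split the data
    return info_gain / split_info if split_info > 0 else 0.0


# Toy data, invented for illustration only
labels = ["A", "A", "B", "B"]
activity = ["Seldom", "Seldom", "Frequent", "Frequent"]  # separates the labels perfectly
gender = ["M", "F", "M", "F"]                            # carries no information here
print(gain_ratio(activity, labels))
print(gain_ratio(gender, labels))
```

At each node, the tree would split on the attribute with the highest gain ratio. In this toy data that is the activity column.
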

Results:

- Frequent Decision Tree

- Regular Decision Tree

- Regular Decision Tree Description

Conclusion:

From the results above, we obtain information about customers’ payment behavior. Most people seldom adopt a new payment method, and most people use Website Account and Bank Transfer as their payment method.